Dynamic AOI Tracking With Neon and SAM2 Segmentation

TIP

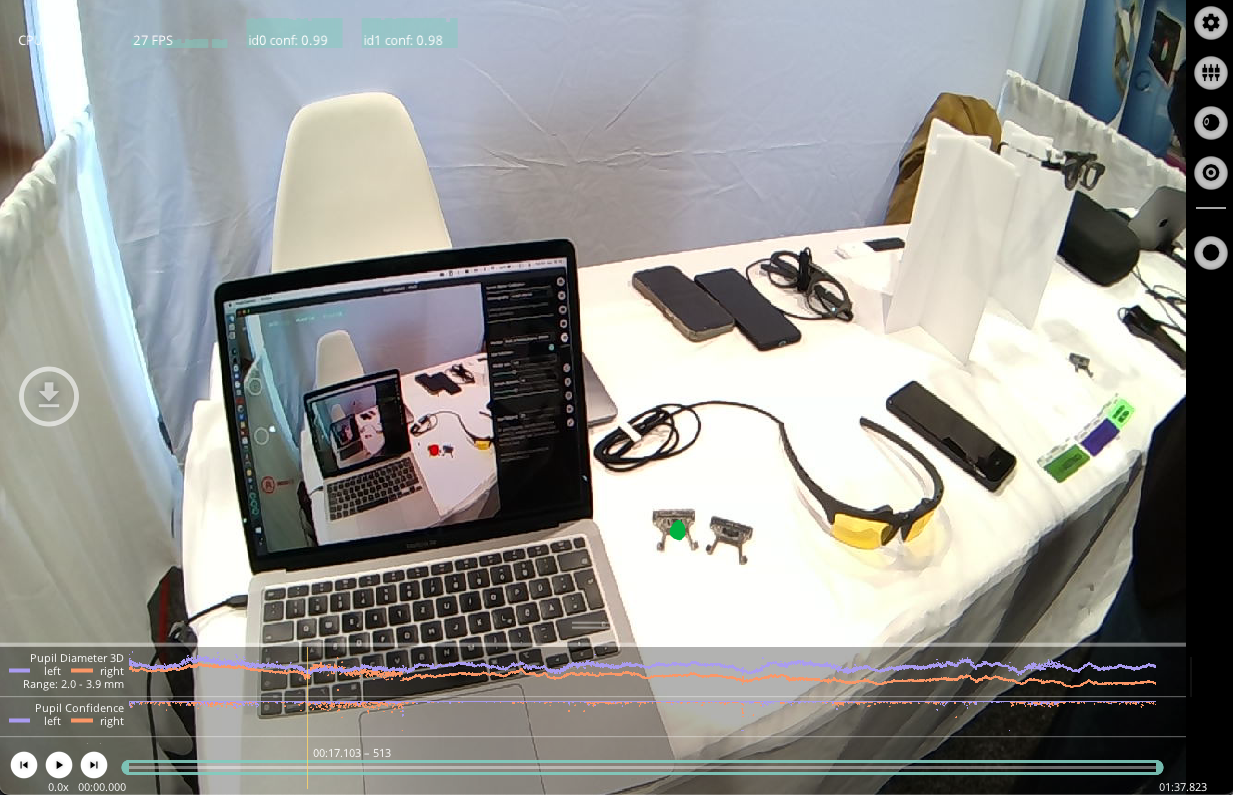

Click. Segment. Track. Dynamic object tracking made easy with Neon and SAM2 segmentation.

Eye tracking becomes far more powerful when gaze can be mapped onto everything we interact with in a scene, from people to objects, as they move. Traditionally, this meant manually coding, frame by frame, where a participant's gaze landed: a time-consuming and error-prone process. This barrier made studying complex, real-world actions challenging.

With this Alpha Lab tutorial, we explore how to integrate the Segment Anything Model 2 (SAM2) with Pupil Labs' Neon eye tracker to help solve that problem. The tool lets you define dynamic Areas of Interest (AOIs) with a simple click, without being restricted to predefined categories. Once defined, each AOI is automatically tracked across the scene video and adapts to the object's movement.

The outcome is a flexible and efficient workflow for studying moving AOIs, bringing gaze analysis closer to the realities of naturalistic, real-world interactions in sports, classrooms, clinical settings, or everyday life.

Map Gaze Onto Any Moving AOI With a Faster, More Flexible Workflow

Pupil Cloud currently supports automatic AOI mapping through enrichments such as Reference Image Mapper, which works well for static objects and environments. However, in many real-world applications, such as sports, shopper research, surgical workflows, and everyday interactions, the objects and people you want to track are constantly in motion.

Our existing Alpha Lab tutorial, which uses object detectors such as YOLO, can track moving objects, but only those belonging to the classes the model was trained on. As a result, researchers have often had to choose between manually annotating videos, relying on static AOIs, or restricting their analysis to broad category labels.

This workflow uses SAM2 segmentation to introduce user-defined, click-based AOIs that can be tracked dynamically across entire recordings. It runs as a Gradio web app in Google Colab, which gives easy access to a GPU through a user-friendly interface: AOIs are defined in a single frame and then propagated automatically throughout the video.

This significantly reduces annotation time and opens new opportunities for eye tracking research by enabling precise, flexible, and interactive mapping of gaze onto moving targets in naturalistic environments.

Run it!

Upload your Neon eye tracking recordings to Pupil Cloud and obtain a Pupil Cloud developer token.

Open the provided Google Colab Notebook and follow the instructions.

- Once the Gradio app launches, load your recording, select a frame, and click on the objects of interest.

- Prompt the tool to propagate these AOI masks across the video, automatically mapping gaze data onto segmented objects.
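Mapping gaze onto the propagated masks requires pairing each gaze sample with a scene-video frame, because Neon samples gaze at a higher rate than the scene camera records frames. The sketch below shows one common approach: each gaze sample is assigned to the most recent scene frame at or before it. It assumes both timestamp lists are sorted and share a clock (e.g. nanoseconds, as in Neon exports). The function `match_gaze_to_frames` is hypothetical and not part of the provided notebook.

```python
from bisect import bisect_right


def match_gaze_to_frames(frame_ts, gaze_ts):
    """For each gaze timestamp, return the index of the latest scene frame
    whose timestamp is <= the gaze timestamp, or -1 if the gaze sample
    precedes the first frame.

    Both inputs are assumed to be sorted ascending and on the same clock.
    """
    indices = []
    for t in gaze_ts:
        # bisect_right counts the frames with timestamp <= t,
        # so subtracting 1 gives the index of the last such frame.
        indices.append(bisect_right(frame_ts, t) - 1)
    return indices


# Toy data in nanoseconds: frames at ~30 Hz, gaze samples at 5 ms spacing.
frame_ts = [0, 33_333_333, 66_666_667]
gaze_ts = [-5_000_000, 0, 5_000_000, 35_000_000, 70_000_000]
print(match_gaze_to_frames(frame_ts, gaze_ts))
```

Assigning gaze to the preceding frame means each sample is paired with the frame that was on screen when the gaze was recorded. Snapping to the nearest frame is an equally reasonable alternative.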

INFO

SAM2 segmentation is computationally intensive. We suggest starting with shorter videos or splitting your videos into parts. Longer videos may exceed the available GPU or memory resources.
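One way to split a video into parts is to process it in fixed-size chunks of frames. Consecutive chunks can optionally overlap so that the boundary frames are covered by both. The helper below only computes the frame ranges and does not cut the video itself. The name `chunk_frame_ranges`, the chunk size, and the overlap are illustrative assumptions, not settings taken from the notebook.

```python
def chunk_frame_ranges(n_frames, chunk_size, overlap=0):
    """Split frame indices [0, n_frames) into half-open (start, end) ranges.

    Consecutive ranges share `overlap` frames; requires 0 <= overlap < chunk_size.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    ranges = []
    start = 0
    step = chunk_size - overlap
    while start < n_frames:
        end = min(start + chunk_size, n_frames)
        ranges.append((start, end))
        if end == n_frames:
            break  # last chunk reaches the end of the video
        start += step
    return ranges


# Assuming a 5-minute scene video at 30 fps (9000 frames).
print(chunk_frame_ranges(9000, 3000, overlap=30))
```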

What You Can Expect

This tool provides a workflow for dynamic AOI tracking, making it easier than ever to study gaze behavior in naturalistic, real-world tasks.

- Creates a segmentation-enriched video where Neon's gaze is mapped onto moving AOIs. Mask overlays change colour whenever gaze intersects with the AOI, giving an immediate visual representation of gaze-object interactions.

- Produces CSV outputs including timestamps and gaze coordinates, along with a clear indication of whether each gaze point fell inside or outside the AOI mask.
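The inside/outside decision described above amounts to looking up the mask pixel under each gaze point. The sketch below assumes that each AOI mask is a binary grid (rows of 0/1 values) at scene-video resolution and that gaze coordinates are in scene-camera pixels. The helper names and CSV column names are hypothetical and may not match the tool's actual export.

```python
import csv
import io
import math


def gaze_in_mask(mask, x, y):
    """Return True if gaze point (x, y) falls on a nonzero pixel of `mask`.

    `mask` is indexed as mask[row][col]; float pixel coordinates are floored
    to the containing pixel. Points outside the image count as outside the AOI.
    """
    col, row = math.floor(x), math.floor(y)
    if row < 0 or col < 0 or row >= len(mask) or col >= len(mask[0]):
        return False
    return bool(mask[row][col])


def write_gaze_aoi_csv(samples, masks, out):
    """Write one CSV row per gaze sample with an inside/outside AOI flag.

    `samples` yields (timestamp_ns, x, y, frame_idx) tuples; `masks` maps a
    frame index to that frame's binary mask. Frames without a mask are
    reported as outside.
    """
    writer = csv.writer(out)
    writer.writerow(["timestamp [ns]", "gaze x [px]", "gaze y [px]", "inside_aoi"])
    for ts, x, y, frame_idx in samples:
        mask = masks.get(frame_idx)
        inside = mask is not None and gaze_in_mask(mask, x, y)
        writer.writerow([ts, x, y, inside])


# Tiny 3x3 mask whose lower-right 2x2 block is the AOI.
mask = [[0, 0, 0],
        [0, 1, 1],
        [0, 1, 1]]
masks = {0: mask}
samples = [(0, 1.5, 1.2, 0), (5_000_000, 0.4, 0.4, 0), (10_000_000, 2.0, 2.0, 1)]
buf = io.StringIO()
write_gaze_aoi_csv(samples, masks, buf)
print(buf.getvalue())
```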

TIP

Need help setting up your own AOI segmentation workflow? Reach out to us via email at info@pupil-labs.com, our Discord server, or visit our Support Page for formal support options.
