Build Gaze-Contingent Assistive Applications

TIP

Imagine a world where transformative assistive solutions enable you to browse the internet with a mere glance or write an email using only your eyes. This is not science fiction; it is the realm of gaze-contingent technology.

Hacking the Eyes With Gaze Contingency

'Gaze contingency' refers to a type of human–computer interaction where interfaces or display systems adjust their content based on the user's gaze. It is commonly used in assistive applications because it lets people interact with a computer or device using their eyes instead of a mouse or keyboard, as in the video above. This is particularly valuable for individuals with physical disabilities and offers new opportunities for communication, education, and overall digital empowerment.

Limitations and Current Prospects

Gaze-contingent assistive technologies have become much easier to use recently thanks to advancements in the field of eye tracking. Traditional assistive systems require frequent calibration, which can be problematic in practice, as highlighted in the story of Gary Godfrey. Modern calibration-free approaches like Neon overcome this issue and provide a more robust and user-friendly input modality for producing gaze data.

We have prepared a guide to aid you in creating your very own gaze-contingent assistive applications using Neon.

How To Use a Head-Mounted Eye Tracker for Screen-Based Interaction

Mapping Gaze To Screen

Neon is a wearable eye tracker that provides gaze data in scene camera coordinates, i.e., relative to its forward-facing camera. We therefore need to transform gaze from scene-camera to screen-based coordinates in real time, such that the user can interact with the screen. Broadly speaking, we need to locate the screen, send gaze data from Neon to the computer, and map gaze into the coordinate system of the screen.

To locate the screen, we use AprilTags to identify the image of the screen as it appears in Neon’s scene camera. Gaze data is transferred to the computer via Neon's Real-time API. We then transform gaze from scene camera to screen-based coordinates using a homography, similar to the approach of the Marker Mapper enrichment that we offer in Pupil Cloud as a post-hoc solution. The heavy lifting is handled by our Real-time Screen Gaze package (written for this guide).
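To make the homography step more concrete, here is a minimal, self-contained sketch in pure Python. It is not the code used by the Real-time Screen Gaze package. It assumes the four screen corners have already been located in the scene camera image (for example from the AprilTag detections) and that the screen is 1920x1080 pixels. All function names and coordinate values below are hypothetical and chosen for illustration only.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear_system(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve a @ x = b using Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(n):
            if row != col:
                factor = m[row][col] / m[col][col]
                for k in range(col, n + 1):
                    m[row][k] -= factor * m[col][k]
    return [m[i][n] / m[i][i] for i in range(n)]


def estimate_homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """Estimate the 3x3 homography (with h33 fixed to 1) mapping four src points to dst."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear equations per point correspondence.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear_system(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def map_point(h: Matrix, point: Point) -> Point:
    """Apply the homography to a single point (with perspective division)."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)


# Hypothetical screen corners as seen in the scene camera image (pixels),
# ordered top-left, top-right, bottom-right, bottom-left.
scene_corners = [(400.0, 300.0), (1200.0, 320.0), (1180.0, 800.0), (420.0, 780.0)]
# The same corners in screen coordinates, assuming a 1920x1080 display.
screen_corners = [(0.0, 0.0), (1920.0, 0.0), (1920.0, 1080.0), (0.0, 1080.0)]

H = estimate_homography(scene_corners, screen_corners)

# A hypothetical gaze sample in scene camera coordinates, mapped to the screen.
gaze_on_screen = map_point(H, (800.0, 550.0))
print(gaze_on_screen)
```

In practice the screen corners move with every head movement, so the homography would need to be re-estimated for each scene camera frame. A real implementation would also need to account for lens distortion, which this sketch ignores.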

Gaze-Controlling a Mouse

The second challenge is using screen-mapped gaze to control an input device, e.g., a mouse. To demonstrate how to do this, we wrote the Gaze-controlled Cursor Demo. This demo leverages the Real-time Screen Gaze package to obtain gaze in screen-based coordinates, and then uses that to control a mouse in a custom browser window, as shown in the video above. A simple dwell-time filter implemented in the demo enables mouse clicks when gaze hovers over different elements of the browser.
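As an illustration of the dwell-time idea, the sketch below fires a single click once gaze has stayed within a given radius of where it first landed for a given duration. It is a simplified stand-in, not the demo's actual filter. The class name `DwellDetector`, its parameters, and the sample values are all hypothetical, and input is assumed to be screen-mapped gaze in pixels with timestamps in seconds.

```python
import math
from typing import Optional, Tuple


class DwellDetector:
    """Report a click when gaze stays within a radius for long enough."""

    def __init__(self, radius_px: float, dwell_time_s: float) -> None:
        self.radius_px = radius_px
        self.dwell_time_s = dwell_time_s
        self._anchor: Optional[Tuple[float, float]] = None
        self._start_time = 0.0
        self._fired = False

    def update(self, x: float, y: float, timestamp: float) -> bool:
        """Feed one gaze sample; return True only on the sample that completes a dwell."""
        moved_away = (
            self._anchor is None
            or math.dist(self._anchor, (x, y)) > self.radius_px
        )
        if moved_away:
            # Gaze left the dwell circle (or this is the first sample): restart.
            self._anchor = (x, y)
            self._start_time = timestamp
            self._fired = False
            return False
        if not self._fired and timestamp - self._start_time >= self.dwell_time_s:
            # Fire once per dwell, then wait until gaze moves away.
            self._fired = True
            return True
        return False


# Hypothetical gaze samples: (x, y, timestamp in seconds).
samples = [
    (500.0, 400.0, 0.0),
    (503.0, 398.0, 0.25),
    (498.0, 402.0, 0.5),
    (501.0, 401.0, 0.75),
    (500.0, 400.0, 1.0),
    (900.0, 100.0, 1.25),
]

detector = DwellDetector(radius_px=25.0, dwell_time_s=0.75)
clicks = [detector.update(x, y, t) for x, y, t in samples]
print(clicks)
```

Firing only once per dwell avoids repeated clicks while the user keeps looking at the same element. The trade-off between a short dwell time (faster, but more accidental clicks) and a long one (slower, but more deliberate) is something each user will likely want to tune.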

It’s Your Turn…

Follow the steps below to navigate a website with your gaze and trigger mouse clicks by fixating on different parts of the page.

Steps

- Follow the instructions in the Gaze-controlled Cursor Demo to download and run it locally on your computer.

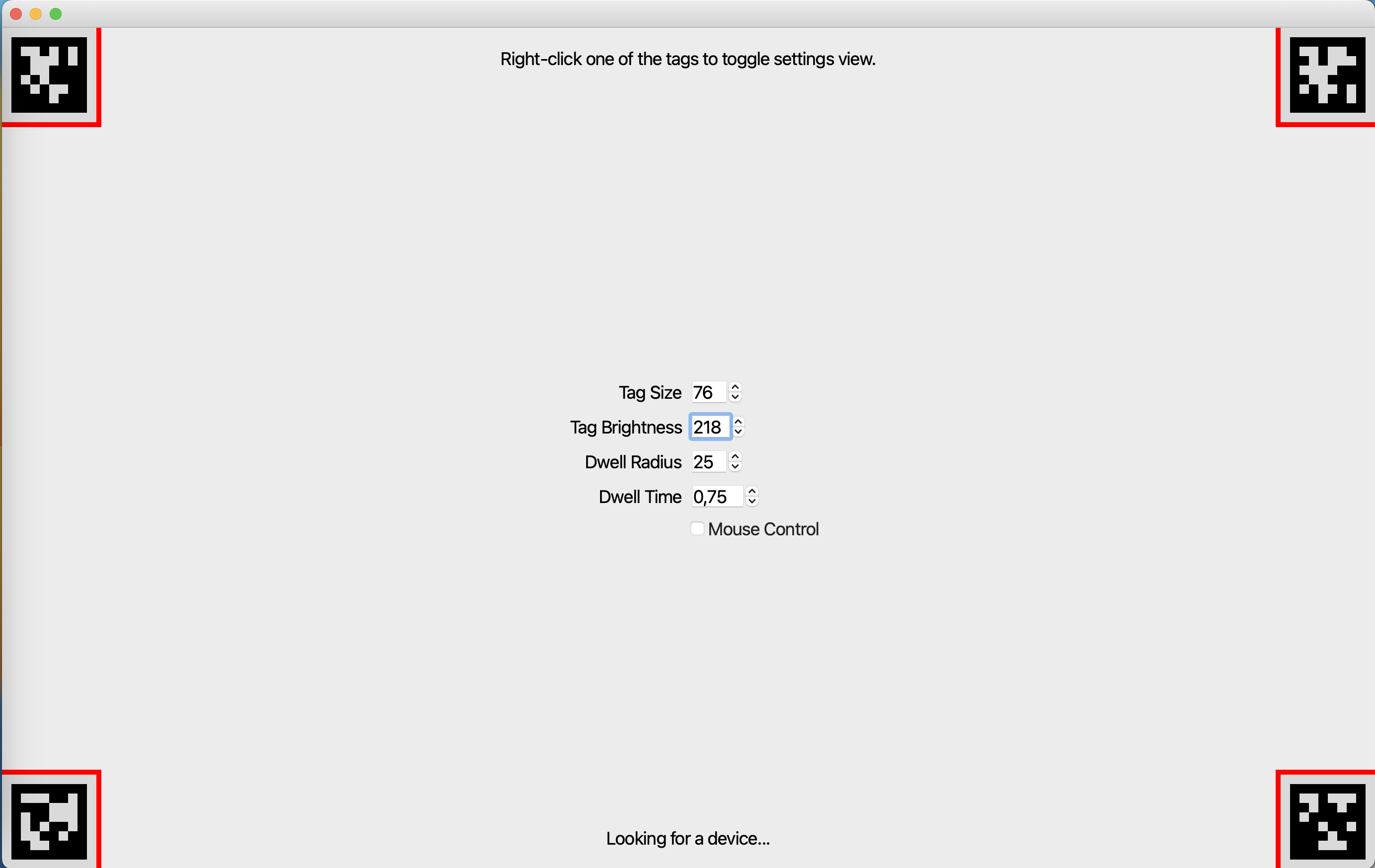

- Start up Neon, make sure it’s detected in the demo window, then check out the settings:

  - Adjust the Tag Size and Tag Brightness settings as necessary until all four AprilTag markers are successfully tracked (markers that are not tracked will display a red border as shown in the image below).
  - Modify the Dwell Radius and Dwell Time values to customize the size of the gaze circle and the dwell time required for gaze to trigger a mouse action.
  - Click on Mouse Control and embark on your journey into the realm of gaze contingency.
  - Right-click anywhere in the window or on any of the tags to show or hide the settings window.

What’s Next?

The packages we created contain code that you can build on to fashion your own custom implementations, opening up possibilities for navigation, typing on a virtual keyboard, and much more.

Dig in and hack away. The potential is boundless. Let us know what you build!

TIP

Need assistance implementing your gaze-contingent task? Reach out to us via email at info@pupil-labs.com, on our Discord server, or visit our Support Page for dedicated support options.

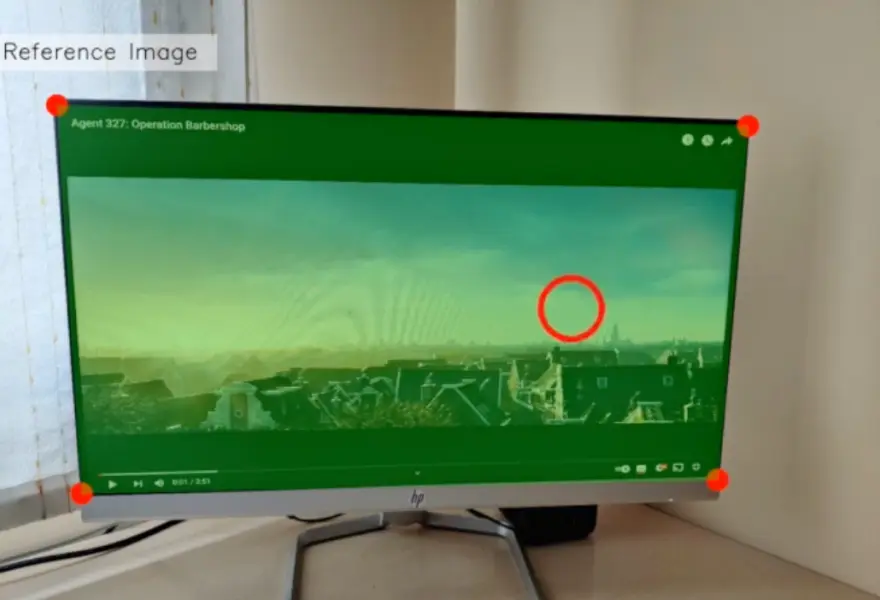

Map Gaze Onto Dynamic Screen Content

Map and visualise gaze onto a screen with dynamic content, such as a video or a web browsing session, using Pupil Cloud's Reference Image Mapper and screen recording software.

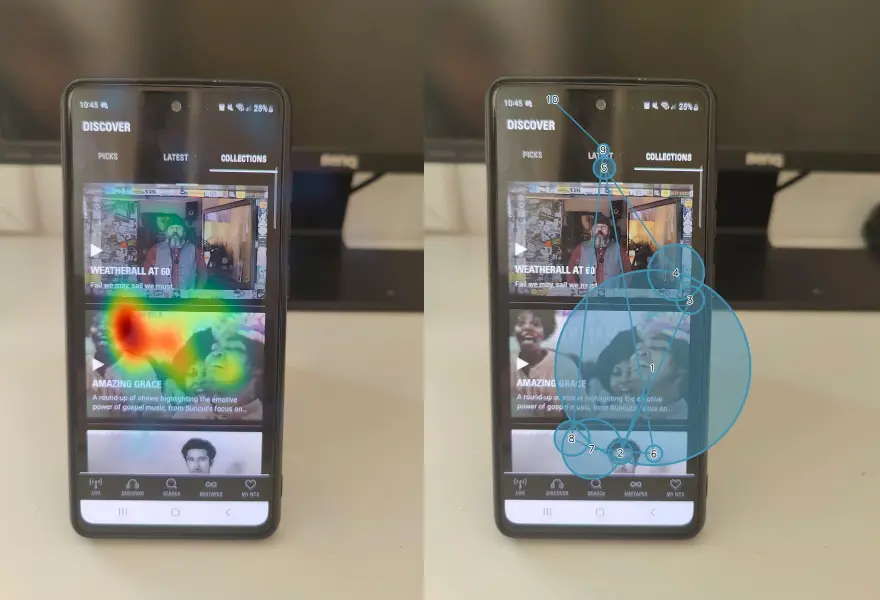

Uncover Gaze Behaviour on Phones

Capture and analyze users' viewing behaviour when focusing on small icons and features of mobile applications using Neon eye tracking alongside existing Cloud and Alpha Lab tools.

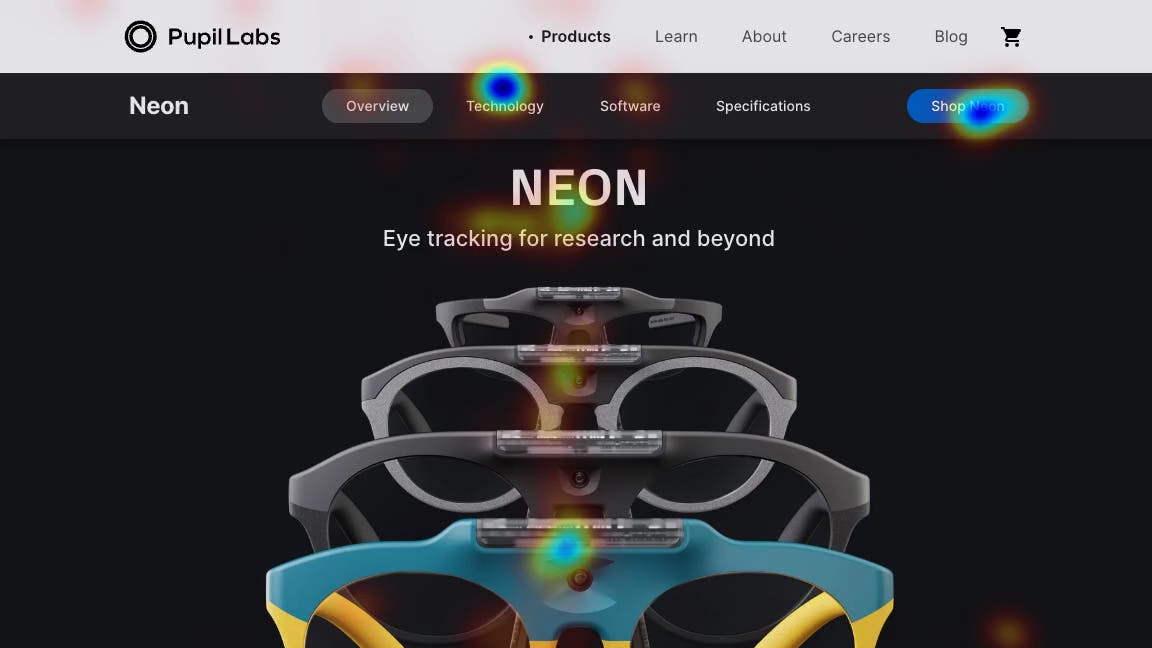

Map Gaze Onto Website AOIs

Define areas of interest on a website and map gaze onto them using our Web-AOI tool.